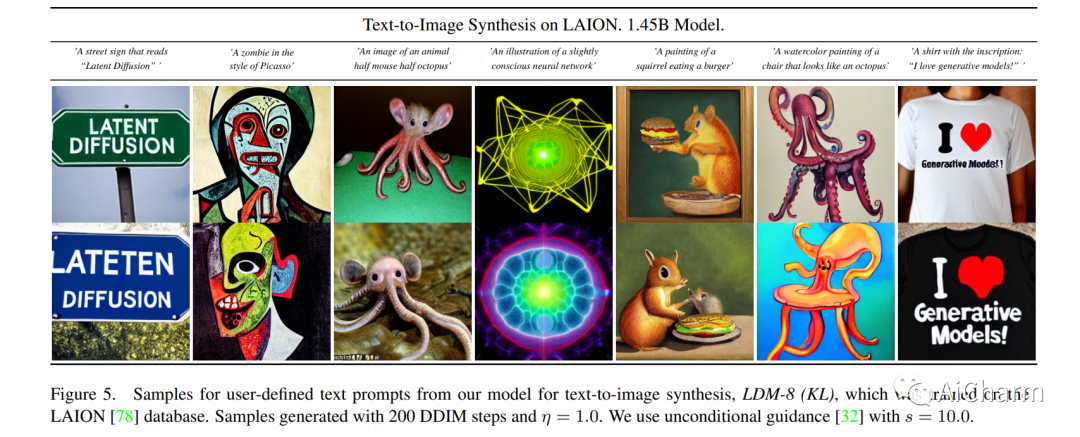

LAION, a German research organization known for creating datasets used in training generative AI models like Stable Diffusion, has released a new version of its dataset called Re-LAION-5B. This release is a cleaned version of the previous LAION-5B dataset, which had faced criticism for containing links to child sexual abuse material (CSAM) and other problematic content.

Re-LAION-5B has been cleaned to remove known links to suspected CSAM. The work responds to concerns raised by several organizations, including the Stanford Internet Observatory, which documented the presence of such material in the original dataset. In total, 2,236 links identified as problematic were removed.

LAION says the cleaned release sets a new safety standard for handling web-scale image-link datasets. It arrives as governments worldwide scrutinize how technology is used to create or distribute illegal content, including child abuse imagery.

The new dataset does not change models that were already trained on the original LAION-5B. It does, however, give developers a cleaner starting point for future training, and it reflects LAION's stated commitment to ethical AI development and to maintaining safer training data.

For more information, see LAION's official announcement.